20. Internal evolution in galaxies

While galaxies are shaped and reshaped by mergers and accretion as we discussed in Chapter 19, many galaxies—especially disk galaxies—evolve significantly through internal dynamical processes as well. Bars and spirals are not just visually fascinating morphological features, but they also drive large-scale flows of stars and gas that lead to star formation, nuclear activity, and wholesale changes to the structure of the galaxy. Internal evolution of this type is slow and steady, and changes the appearance of galaxies only over many dynamical times. Such evolution is often described as secular—a term from time-series analysis that indicates long-term upwards or downwards trends as opposed to cyclical variations—and the topic of internal galactic evolution is part of the broader galaxy-evolution subfield of secular evolution (Kormendy & Kennicutt 2004). Secular evolution, however, encompasses any slow, long-term evolution of galaxies, whether it be driven by internal processes or by external processes such as slow gas accretion. In this chapter, we focus on internal secular evolution.

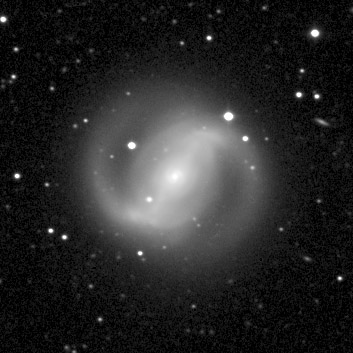

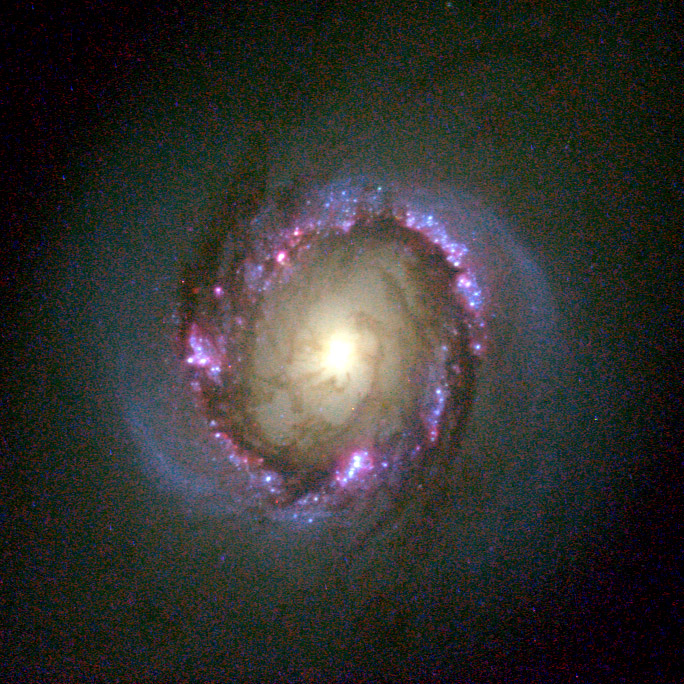

Bars are arguably the largest drivers of secular evolution in barred disk galaxies. Bars often dominate the central mass distribution in disk galaxies (e.g., the prominent bar in NGC 1300 in Figure 1.3) and exert large torques on stars and gas in the disk. These torques drive gas inwards, fueling nuclear starbursts and creating prominent rings when gas is stalled at the dynamical resonances that we will discuss in this chapter. NGC 4314, shown in Figure 20.1, provides a good example.

Figure 20.1: NGC 4314, a barred spiral (left; McDonald Observatory) with a prominent inner ring of star formation (right; Benedict et al. 2002 and NASA).

The panel on the left demonstrates that NGC 4314 has a prominent bar that dominates much of its disk. The panel on the right is a zoom-in on the central region that displays a nuclear ring of young stars and star clusters, created from gas driven inwards by the bar. Bars similarly drive gas outwards, creating outer rings in the process. Spirals, present in almost all disk galaxies, drive similar gas flows; they also move stars to different orbits, significantly reshaping the stellar chemo-dynamical distribution over time.

Why do galaxies evolve rather than remain in equilibrium? As we stressed when we first discussed galactic equilibria in Chapter 5, galaxies are only in a quasi-equilibrium state. While galaxies, like many physical systems, strive towards a state of maximum entropy, self-gravitating systems do not have a maximum-entropy state: the maximum possible entropy is infinite (Antonov 1962a). Thus, no realistic self-gravitating system can be in a maximum-entropy state, and all such systems are slowly evolving towards higher-entropy configurations.

How galaxies evolve towards a higher-entropy state depends on the type of galaxy we are dealing with. For disk galaxies, much of the mass of the system is engaged in ordered rotational motion, and the way to increase the entropy of the system is to convert this low-entropy ordered motion into high-entropy random motion (Lynden-Bell & Kalnajs 1972). This process unfolds in the following manner. Because internal dynamical evolution is slow, it is reasonable to assume that the galaxy approximately satisfies the scalar virial theorem from Equation (14.18) at all times. Combining this with the conservation of the total energy \(E = K + W\) of an isolated self-gravitating system, we have that \(E = -K = W/2 = \mathrm{constant}\). Kinetic and potential energy are, therefore, separately conserved, and an increase in the contribution from random motion to the kinetic energy must be exactly cancelled by a decrease in the contribution from ordered rotation. The final constraint is that the total angular momentum \(L\) must also be conserved. Because \(L\) is, for fixed mass, essentially the product of the system's size and its typical rotational velocity, decreasing the kinetic energy in ordered motion while conserving \(L\) can be achieved by increasing the size of the system. However, the system cannot simply expand, because this would increase the potential energy \(W\) (that is, make it less negative). This increase in \(W\) must, therefore, be counteracted by a slight contraction of the core; only a slight contraction is necessary, because \(W\) is dominated by the core region. Therefore, if a disk galaxy can re-arrange its stellar distribution such that it contracts near the center while expelling some mass towards large radii, it can move towards a higher-entropy state.
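The energy and angular-momentum bookkeeping above can be made concrete with a deliberately crude toy model. The sketch below is an illustration under assumptions that are not in the text: units with \(G = 1\), a core treated as a uniform-density sphere (self-energy \(-3GM^2/5R\)), the outer material treated as a thin ring of mass \(m\) at radius \(r\) whose self-energy is neglected, and hypothetical function names. It checks the virial relation \(E = -K = W/2\), shows that moving the ring outward at fixed \(L\) lowers its ordered kinetic energy, and solves for the core radius that keeps \(W\) unchanged once the ring has moved out (which makes \(W\) less negative).

```python
"""Toy bookkeeping for the disk-galaxy entropy argument.

Hypothetical model (not from the text): G = 1; the core is a uniform-density
sphere of mass M_core and radius R_core; the outer material is a thin ring of
mass m_ring at radius r_ring; the ring's self-energy is neglected.
"""

G = 1.0


def virial_energies(K):
    """Scalar virial theorem 2K + W = 0, so W = -2K and E = K + W = -K = W/2."""
    W = -2.0 * K
    E = K + W
    return E, W


def ring_rotational_energy(L, m_ring, r_ring):
    """Ordered kinetic energy m v^2 / 2 of a ring with L = m r v held fixed."""
    v = L / (m_ring * r_ring)
    return 0.5 * m_ring * v**2


def potential_energy(M_core, R_core, m_ring, r_ring):
    """Core self-energy plus the ring's interaction energy with the core."""
    return -3.0 * G * M_core**2 / (5.0 * R_core) - G * M_core * m_ring / r_ring


def core_radius_keeping_W(W_target, M_core, m_ring, r_ring):
    """Core radius for which potential_energy(...) equals W_target."""
    core_term = W_target + G * M_core * m_ring / r_ring  # must be negative
    return -3.0 * G * M_core**2 / (5.0 * core_term)


if __name__ == "__main__":
    M_core, R0, m_ring, r0, L = 1.0, 1.0, 0.1, 5.0, 0.5
    W0 = potential_energy(M_core, R0, m_ring, r0)
    r1 = 1.2 * r0  # expand the ring by 20%
    R1 = core_radius_keeping_W(W0, M_core, m_ring, r1)
    print("E, W for K = 1:", virial_energies(1.0))
    print("ring K_rot before/after:",
          ring_rotational_energy(L, m_ring, r0),
          ring_rotational_energy(L, m_ring, r1))
    print(f"core radius {R0:.4f} -> {R1:.4f}")
```

With these illustrative numbers, a 20% expansion of the ring requires the core to shrink by only about half a percent, because the core dominates \(W\). The model tracks only ordered rotation and \(W\); the transfer of the released ordered energy into random motion is not modeled.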

Spheroidal systems supported by random motions, such as elliptical galaxies, globular clusters, and dark-matter halos, evolve similarly towards a higher-entropy state. Most of their kinetic energy is already in random motions, so converting ordered rotation into random motion has little effect, but changing the density and phase-space distribution can increase the entropy as well. The important physical insight here is that realistic self-gravitating galactic systems have negative specific heats: when they lose energy, their temperatures increase. The temperature \(T\) of a self-gravitating system is defined through its kinetic energy \(K\) as \(K = 3NkT/2\), where \(N\) is the number of bodies and \(k\) is the Boltzmann constant. In virial equilibrium, \(K = -E\), and the specific heat is \(C = \mathrm{d} E / \mathrm{d} T = -3Nk/2 < 0\) (Lynden-Bell & Wood 1968). Suppose then that the core of a self-gravitating system is slightly hotter than its outskirts. Heat will flow from the core to the outskirts, reducing the energy of the core, that is, making it more negative than it was before. The kinetic energy and temperature of the core, therefore, increase, while the heat deposited in the outskirts causes them to cool. The core thus becomes more tightly bound and more concentrated, while the outskirts expand. Like disk galaxies, spheroidal systems therefore tend to evolve towards a state with an ever more concentrated core surrounded by an extended envelope.
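A two-zone caricature makes the sign argument explicit. The sketch below is a hypothetical illustration, not a model from the text: it sets \(k = 1\), assumes that the core and the outskirts are each separately virialized with heat capacity \(C = -3Nk/2\), and lets heat flow from the hotter to the cooler zone at a rate proportional to their temperature difference with an arbitrary coefficient `kappa`. Because both heat capacities are negative, the core heats up as it loses energy and the outskirts cool as they gain it.

```python
"""Two-zone toy model of heat flow in a self-gravitating system.

Hypothetical assumptions: k = 1; each zone is separately virialized, so
K = -E = 3 N T / 2 and the heat capacity is C = dE/dT = -3 N / 2; heat flows
at a rate kappa * (T_core - T_out) with an arbitrary conduction coefficient.
"""


def heat_capacity(N):
    """C = dE/dT for a virialized system of N bodies (k = 1)."""
    return -1.5 * N


def temperature_from_energy(E, N):
    """Virial equilibrium: K = -E = 3 N T / 2, so T = -2 E / (3 N)."""
    return -2.0 * E / (3.0 * N)


def evolve_two_zone(T_core, T_out, N_core, N_out, kappa=0.1, dt=1.0, n_steps=20):
    """Return the list of (T_core, T_out) after each explicit time step."""
    C_core, C_out = heat_capacity(N_core), heat_capacity(N_out)
    history = [(T_core, T_out)]
    for _ in range(n_steps):
        Q = kappa * (T_core - T_out) * dt  # heat leaving the core this step
        T_core += -Q / C_core  # dE_core = -Q and C_core < 0, so T_core rises
        T_out += Q / C_out     # dE_out = +Q and C_out < 0, so T_out falls
        history.append((T_core, T_out))
    return history


if __name__ == "__main__":
    # Removing energy (E more negative) raises the temperature.
    print(temperature_from_energy(-1.0, 100), temperature_from_energy(-1.1, 100))
    for T_c, T_o in evolve_two_zone(1.01, 1.0, N_core=100, N_out=1000)[::5]:
        print(f"T_core = {T_c:.5f}, T_out = {T_o:.5f}, dT = {T_c - T_o:.5f}")
```

In this explicit scheme the temperature difference grows geometrically, by a factor \(1 + \kappa\,\Delta t\,(1/|C_{\rm core}| + 1/|C_{\rm out}|)\) per step, which is the runaway behind the core collapse discussed below.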

Whether disk galaxies or spheroidal systems can actually evolve as described in the previous paragraphs over the age of the Universe depends on whether they have access to dynamical processes that reshape their phase-space distributions on this time scale. We have seen that disk galaxies are close to axisymmetric. If they were exactly axisymmetric, not only would the total angular momentum of the system be conserved, but so would the \(z\)-component of the angular momentum of every individual star. Contracting the core and expanding the outskirts would then be impossible, because all bodies would be forced to oscillate around their fixed guiding-center radii. However, if disk galaxies develop non-axisymmetric structures such as bars or spiral structure, these can transfer angular momentum and drive the necessary changes to the phase-space distribution (Lynden-Bell & Kalnajs 1972). This is why it is favorable for bars and spirals to form and why they are important drivers of internal galactic evolution. In Section 20.1, we will discuss under what circumstances disk galaxies are susceptible to bar or spiral-structure formation, and in Sections 20.2 and 20.3, we then discuss the observational and orbital properties of bars and spiral structure, respectively, their long-term evolution, and their effect on the stellar and gas distributions within galaxies.
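The role of axisymmetry can be checked numerically. The sketch below is illustrative and relies on assumptions that are not in the text: a two-dimensional cored logarithmic potential \(\Phi = \tfrac{1}{2}v_0^2\ln(R_c^2 + x^2 + y^2/q^2)\), a static (non-rotating) elongation \(q < 1\) standing in crudely for a bar, arbitrary units and initial conditions, and a kick-drift-kick leapfrog integrator. For \(q = 1\) the force is central and \(L_z = x v_y - y v_x\) is conserved to round-off; for \(q = 0.8\) the potential exerts a torque and \(L_z\) changes along the orbit.

```python
"""L_z of a single orbit in axisymmetric vs. elongated 2D potentials.

Hypothetical setup (not from the text): cored logarithmic potential
Phi = 0.5 * v0**2 * ln(Rc**2 + x**2 + (y / q)**2) in arbitrary units; q = 1 is
axisymmetric, q < 1 is a static, non-rotating elongation standing in for a bar.
"""


def acceleration(x, y, v0, Rc, q):
    """Return (-dPhi/dx, -dPhi/dy) for the cored logarithmic potential."""
    D = Rc**2 + x**2 + (y / q) ** 2
    return -v0**2 * x / D, -v0**2 * y / (q**2 * D)


def integrate_Lz(q, n_steps=20000, dt=1e-3, v0=1.0, Rc=0.1):
    """Integrate one orbit with kick-drift-kick leapfrog; return L_z at each step."""
    x, y, vx, vy = 1.0, 0.0, 0.0, 0.5  # arbitrary sub-circular starting point
    Lz = [x * vy - y * vx]
    ax, ay = acceleration(x, y, v0, Rc, q)
    for _ in range(n_steps):
        vx += 0.5 * dt * ax  # half kick
        vy += 0.5 * dt * ay
        x += dt * vx         # full drift
        y += dt * vy
        ax, ay = acceleration(x, y, v0, Rc, q)
        vx += 0.5 * dt * ax  # half kick
        vy += 0.5 * dt * ay
        Lz.append(x * vy - y * vx)
    return Lz


def relative_spread(values):
    """(max - min) / |initial value|."""
    return (max(values) - min(values)) / abs(values[0])


if __name__ == "__main__":
    for q in (1.0, 0.8):
        print(f"q = {q}: relative spread in L_z = {relative_spread(integrate_Lz(q)):.2e}")
```

A static elongation is only a stand-in: real bars rotate with a pattern speed, and in the rotating frame the conserved quantity is the Jacobi integral rather than \(L_z\). The point of the sketch is only that any non-axisymmetric force exerts torques that change the angular momenta of individual stars.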

Spheroidal systems like elliptical galaxies are not susceptible to the formation of non-axisymmetric structures like bars or spirals (see Chapter 17.3.3). The only remaining source of heat transfer is then two-body encounters, but as we discussed in Chapter 5.1, the two-body relaxation times for galaxies are orders of magnitude longer than the age of the Universe. Heat transfer is, therefore, inefficient, and this internal secular dynamical evolution does not occur in elliptical galaxies or standard CDM dark-matter halos. We also saw in Chapter 5.1 that the two-body relaxation times in dense globular clusters are \(\approx 1\,\mathrm{Gyr}\), so two-body-relaxation-driven heat transfer does occur there, leading to significant density evolution. In fact, the heat-transfer process described above is a runaway process: the heating of the core and the cooling of the outskirts increase the temperature difference between the two, causing more heat to flow from the core to the outskirts, which contracts the core and increases the temperature difference further. This runaway process is known as core collapse or the gravo-thermal catastrophe (Lynden-Bell & Wood 1968). Eventually, the collapse is halted at a finite central density through the formation of hard (tightly bound) binaries (Hills 1975). If dark matter has strong self-interactions, these can give rise to heat transfer in the dark-matter halo, and a similar core collapse of the dark-matter distribution can occur (Balberg et al. 2002).
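The contrast between galaxies and globular clusters follows from the usual order-of-magnitude estimate of the two-body relaxation time, \(t_{\rm relax} \approx N/(8\ln N)\,t_{\rm cross}\), which is assumed here (the exact prefactor varies between derivations). The values of \(N\) and \(t_{\rm cross}\) below are illustrative round numbers chosen for this sketch, not measurements quoted in the text.

```python
"""Order-of-magnitude two-body relaxation times (illustrative inputs)."""
import math

AGE_OF_UNIVERSE_YR = 13.8e9


def relaxation_time(N, t_cross):
    """Assumed standard estimate t_relax ~ N / (8 ln N) * t_cross."""
    return N / (8.0 * math.log(N)) * t_cross


# Hypothetical round numbers: (number of stars, crossing time in years).
SYSTEMS = {
    "galaxy": (1e11, 1e8),
    "globular cluster": (1e5, 1e6),
}

if __name__ == "__main__":
    for name, (N, t_cross) in SYSTEMS.items():
        t_relax = relaxation_time(N, t_cross)
        print(f"{name:>16}: t_relax ~ {t_relax:.1e} yr "
              f"= {t_relax / AGE_OF_UNIVERSE_YR:.1e} x age of Universe")
```

With these inputs the galaxy's relaxation time exceeds the age of the Universe by a factor of a few million, while the cluster's comes out at roughly 1 Gyr, consistent with the values quoted above.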

Thus, internal evolution mainly affects disk galaxies and it is disk galaxies on which we focus our attention in this chapter.